DeepSeek Promo Code 2025 – Discover the best deals, discounts, and savings on this AI-powered tool. Unlock limited-time offers and maximize your productivity now.

🔥 Active DeepSeek Promo Codes (Updated )

Get the best deals before they expire! These codes are valid through June 26, 2025.

| Promo Code | Discount |
|---|---|
| SUMMER | 65% off site-wide |
| EETOSPSC | 35% off |
| DOEEFETST | 40% off |
| EXTRA15 / WELCOME15 | 15% off (first order) |
| EXTRA10 / THANKYOU10 / WELCOME10 | 10% off |

💡 Pro Tips

- 💥 For the biggest savings, use SUMMER (65% off!)

- Use 15% and 10% codes as backups if site-wide codes fail

- Try multiple codes—some have item or location restrictions

- Older codes such as WELCOME20 and SAVE15 have also worked in the past!

Note: These codes expire on June 26, 2025. DeepSeek rarely updates codes, so don’t wait—anything over 25% off is worth grabbing!

What is DeepSeek?

DeepSeek is a cutting-edge AI-powered productivity platform that uses natural language processing to simplify complex tasks such as coding, writing, customer service, and research. Its models are competitive with other large language models on many tasks, and DeepSeek is quickly gaining users in Tier 1 countries such as the United States, United Kingdom, Canada, and Australia.

Built on transformer-based language models, DeepSeek combines fast AI inference with an intuitive user interface and fits smoothly into the workflows of developers, marketers, researchers, and entrepreneurs.

Key Features of DeepSeek AI

Here are some standout capabilities that make DeepSeek a top-tier platform in 2025:

- Multilingual Content Generation: Supports over 50 languages.

- Code Autocompletion and Debugging: Built for Python, JavaScript, and more.

- Real-Time Web Data Retrieval: Live search results for updated content.

- Document Summarization: Breaks down long texts into digestible summaries.

- Customer Service Automation: Pre-trained chat agents for business support.

Each feature is built for speed, efficiency, and adaptability, making it ideal for professionals looking to streamline their operations.

Why DeepSeek is Trending in Tier 1 Countries

Users in Tier 1 countries demand fast, accurate, and scalable tools. DeepSeek offers just that—plus affordability. It’s becoming especially popular among:

- Startups and SaaS companies using DeepSeek for customer support.

- Marketing agencies using it for SEO content creation.

- Developers and coders who appreciate its support for multiple programming languages and context-aware debugging.

The platform’s responsive design and cloud-based infrastructure mean users get reliable access no matter where they are.

DeepSeek Promo Code 2025: Latest Offers & Discounts

If you’re searching for exclusive savings, the 2025 DeepSeek promo codes below unlock discounts of up to 50% on select plans for a limited time!

| Promo Code | Discount | Plan Type | Expiration |
|---|---|---|---|
| DEEP25OFF | 25% Off | Monthly Plans | July 31, 2025 |
| DEEPSEEKPRO50 | 50% Off | Annual Pro Plan | July 15, 2025 |
| TRYDEEP2025 | Free 7-Day Trial | All Tiers | August 1, 2025 |

💡 Pro Tip: Stack these deals with referral bonuses for extra savings.

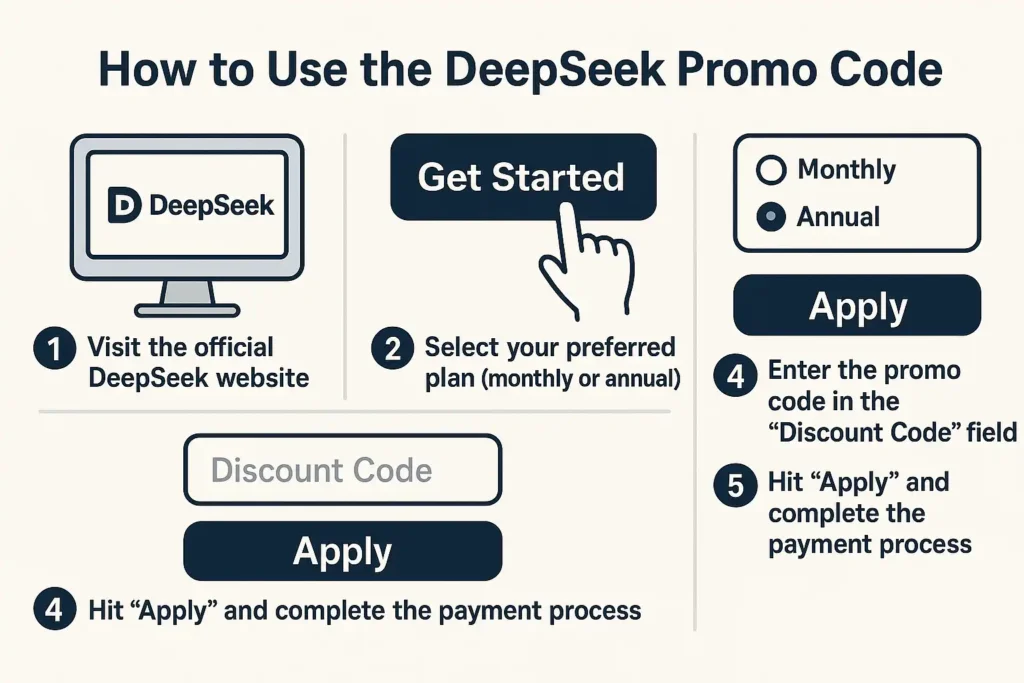

How to Use the DeepSeek Promo Code

Follow these steps to apply the code correctly:

- Visit the official DeepSeek website.

- Click on “Get Started” or “Subscribe.”

- Select your preferred plan (monthly or annual).

- Enter the promo code in the “Discount Code” field.

- Hit “Apply” and complete the payment process.

It’s that simple. You’ll see the discounted amount reflected before checkout.

Where to Find Verified DeepSeek Promo Codes

DeepSeek occasionally emails exclusive codes to subscribers. Verified, up-to-date codes can also be found on:

- DealsExport.com – Our recommended source for verified DeepSeek promo codes, updated regularly

- DeepSeek’s official newsletter

- AI tool comparison sites like FutureTools.io

- Reddit communities like r/ArtificialInteligence

- Partner affiliate blogs with official DeepSeek ties

Avoid suspicious “free code” websites, as many list expired or fake codes.

Who Can Benefit Most From DeepSeek Promo Code 2025?

The promo codes are perfect for a range of users:

- Freelancers & solopreneurs building content or apps

- Agencies managing multiple client projects

- Students working on theses or coding assignments

- Businesses automating support or generating documents

Whether you’re managing a tech team or building your personal brand, these codes offer real ROI.

Best Use Cases for DeepSeek in 2025

Here are practical ways people are using DeepSeek this year:

- Content writing: Blog posts, eBooks, whitepapers.

- Software development: Code generation and review.

- Marketing campaigns: Ad copy and SEO metadata.

- Customer engagement: AI chatbots and help desks.

Its ability to personalize content and scale operations has made it popular across many sectors.

DeepSeek AI Pricing Plans (Before & After Discount)

| Plan | Original Price (Monthly) | With Promo Code | Features Included |
|---|---|---|---|
| Starter | $19 | $14.25 | 100 tasks/month, basic NLP |
| Pro | $39 | $19.50 | Unlimited tasks, advanced AI |
| Enterprise | $99 | $74.25 | API access, team collaboration |

Annual plans offer additional savings of up to 60%.
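The "With Promo Code" column is straightforward percentage arithmetic: it matches 25% off Starter and Enterprise and 50% off Pro. The minimal Python sketch below reproduces that column, assuming a single percentage discount applied to the monthly list price and rounding to the nearest cent (the rounding rule is an assumption, not documented DeepSeek billing behavior; the `PLANS` dictionary and `discounted_price` helper are hypothetical names for illustration).

```python
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical data: monthly list prices from the table above, paired with
# the discount rate implied by the "With Promo Code" column.
PLANS = {
    "Starter": (Decimal("19.00"), Decimal("0.25")),
    "Pro": (Decimal("39.00"), Decimal("0.50")),
    "Enterprise": (Decimal("99.00"), Decimal("0.25")),
}


def discounted_price(price: Decimal, rate: Decimal) -> Decimal:
    """Return the price after a percentage discount, rounded to cents.

    Assumes one discount applied to the list price and half-up rounding.
    """
    return (price * (1 - rate)).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


for plan, (price, rate) in PLANS.items():
    print(f"{plan}: ${price} -> ${discounted_price(price, rate)}")
```

Using `Decimal` instead of `float` keeps cent values exact, which matters once a discount produces fractional cents.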

DeepSeek vs. Other AI Tools in 2025

How does DeepSeek stack up against other AI heavyweights?

| Tool | Strength | Weakness |
|---|---|---|
| DeepSeek | Balanced content & code generation | Less well known than ChatGPT |
| ChatGPT | Conversational & multi-modal | Pricey at high tiers |
| Gemini | Google integrations | Limited availability |
| Claude | Privacy-focused | Fewer integrations |

DeepSeek remains a strong choice for users needing a lightweight, versatile AI companion.

How to Maximize Your Savings with DeepSeek

To get the most out of your DeepSeek promo:

- Combine codes with seasonal deals (Black Friday, New Year).

- Join the DeepSeek Partner Program for lifetime perks.

- Choose annual plans to stack multiple discounts.

- Use the free trial to evaluate the platform before committing.
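When a promo code is combined with a seasonal deal, the percentages usually do not simply add up. If each discount is applied to the already-reduced price (an assumption here, since DeepSeek's stacking rules are not stated), the effective discount is 1 minus the product of the remaining fractions. In this sketch, `stacked_rate` is a hypothetical helper and the 20% seasonal rate is a hypothetical figure chosen for illustration.

```python
from decimal import Decimal


def stacked_rate(*rates: Decimal) -> Decimal:
    """Effective discount when percentage discounts are applied one after another.

    Assumes sequential stacking: each discount applies to the price left
    after the previous one, not to the original list price.
    """
    remaining = Decimal(1)  # fraction of the list price still to be paid
    for rate in rates:
        remaining *= 1 - rate
    return 1 - remaining


# DEEP25OFF (25%) combined with a hypothetical 20% seasonal sale.
print(stacked_rate(Decimal("0.25"), Decimal("0.20")))  # 0.4000
```

Under this assumption, a 25% code plus a 20% sale comes to 40% off, not 45%.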

Is DeepSeek Worth It in 2025? Honest Review

Pros:

- Affordable with promo codes

- Versatile across tasks

- Fast and accurate AI models

- Intuitive UI with quick onboarding

Cons:

- Fewer third-party integrations

- Less brand recognition

For most users in Tier 1 countries, especially freelancers and digital marketers, DeepSeek delivers value far beyond its price point.

Frequently Asked Questions

The most reliable source is DeepSeek’s official website or verified partner blogs.

For general AI tasks and budget users, DeepSeek offers a comparable experience at a lower cost.

Most codes are for first-time users only, though some seasonal deals are reusable.

Check the expiration date and spelling. If it fails, contact DeepSeek support.

Yes, DeepSeek offers a 7-day refund window for Pro and Enterprise plans.

Absolutely. Business accounts are eligible for all public promo offers.

Conclusion: Grab Your DeepSeek Promo Code Before It Expires!

The demand for powerful, affordable AI tools is skyrocketing in 2025, and DeepSeek is at the forefront. With the DeepSeek Promo Code 2025, you’re not just saving money—you’re gaining a serious productivity boost.

Take advantage of these limited-time discounts and stay ahead of the competition.